YoungJae Kim, Yechan Park, Seungheon Han, Jooyong Yi

Two Key Questions

What to observe?

How to guide the search based on the observation?

What to Observe?

Critical branch: a branch whose hit count differs before and after an interesting patch is applied

Positive critical branch: a critical branch whose hit count increases

Negative critical branch: a critical branch whose hit count decreases

How to Guide the Search?

We again traverse the patch-space tree using the multi-armed bandit model.

We choose an edge that is more likely to lead to a patch candidate that behaves similarly to the interesting patches found earlier during the repair process.

Count-based Similarity of Patch Behavior

Suppose that an interesting patch was found earlier during the repair process.

Assume that this patch involves a positive critical branch.

A new patch candidate is considered similar to the interesting patch if

the hit count of that same branch increases after applying the candidate

Our Greybox Guidance Policy

We choose an edge that is more likely to lead to a patch candidate that shows count-based similarity to the interesting patches found earlier during the repair process.

Blackbox vs Greybox

Evaluation (Defects4J v1.2; 10 repetitions)

Results on Recalling Correct Patches

Top 1

Top 5

Part 3: Patch Verification

Cast a Wide Net

APR ≈ process of searching for plausible patches + selecting a correct patch

Seungheon Han, YoungJae Kim, Yeseung Lee, Jooyong Yi

Automated Vulnerability Repair

Our Approach

Bounded verification via symbolic execution

Function-level verification to avoid the reachability problem

PoC-centered verification

Under-Constrained Symbolic Execution

struct node {
    int data;
    struct node* next;
};

int listSum(struct node* n) {
    int sum = 0;
    while (n) {
        sum += n->data;
        n = n->next;
    }
    return sum;
}


Limitation of UC-SE

int listSum(struct node* n) {
    int sum = 0;
    int i = 1;      // added
    while (n) {
        sum += n->data;
        n = n->next;
        i *= 2;     // added
    }
    g = arr[i];     // added
    return sum;
}

PoC-Centered Bounded Patch Verification

UC-SE vs. SymRadar

Patch Verification

Patch Classification Rubric

Key Requirements for Patch Verification

Detecting as many incorrect plausible patches as possible (high specificity)

Preserving as many correct patches as possible (high recall)
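If an incorrect plausible patch is treated as a negative and a correct patch as a positive, the two requirements correspond to the usual rates below. The notation is ours, and the counts are assumed to be taken over a benchmark whose patches have known correctness labels.

$$
\text{Specificity} = \frac{\#\,\text{incorrect plausible patches rejected}}{\#\,\text{incorrect plausible patches}},
\qquad
\text{Recall} = \frac{\#\,\text{correct patches preserved}}{\#\,\text{correct patches}}
$$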

Using black-box fuzzing is like firing a machine gun while blindfolded.

White-box fuzzing is like using a sniper rifle: each shot is slow, but it hits a new path every time.

Let me first show you a snippet of the results. We applied our lightgrey-box fuzzer, PathFinder, to PyTorch, a well-known deep learning library. These plots show how branch coverage increases over time. Our approach outperforms the existing state-of-the-art tools, which use a variety of approaches, by a wide margin.

Moreover, as the exploration proceeds, the path conditions can become more precise since more data points for synthesis are available.

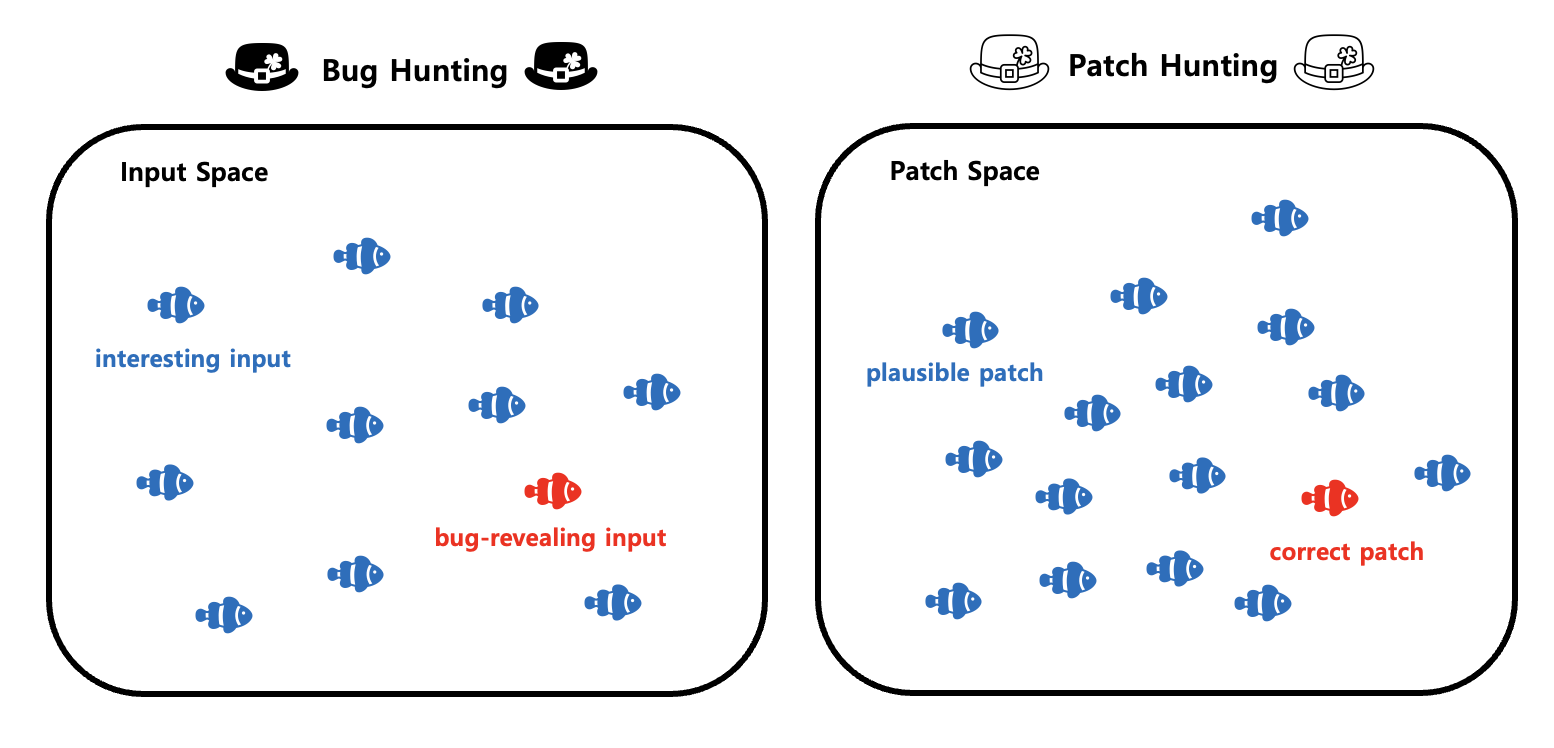

# Bug Hunting and Patch Hunting

---

Our situation can be modeled specifically as a Bernoulli bandit problem. Each arm has its own unknown probability of success, and we need to estimate these probabilities.

The Bernoulli bandit problem can be solved with the Thompson sampling algorithm, which works in three steps. First, for each arm $k$, we sample $\theta_k$ from its distribution. Second, we choose the arm with the largest sampled value. Third, we observe whether the chosen arm succeeds and update its distribution accordingly. For example, suppose we are about to choose between method 1 and method 2, and that the left arm is associated with this Beta distribution while the right arm is associated with that one. A higher value is likely to be sampled from the right arm, in which case we choose the right arm. Note, however, that Thompson sampling still allows the left arm to be chosen, with a smaller probability.

- Distribution of $\theta_k$: $\mathrm{Beta}(\alpha_k, \beta_k)$

[Figure: density plots of $\mathrm{Beta}(\alpha=2,\beta=2)$, $\mathrm{Beta}(\alpha=3,\beta=2)$, and $\mathrm{Beta}(\alpha=5,\beta=2)$]

What if we find an interesting patch? Then we update the distributions of the corresponding edges. For example, if the left plot shows the distribution of an edge before the update, the plot to its right shows the distribution after the update. Notice that the updated distribution is more left-skewed: its mass has shifted toward higher success probabilities, indicating that selecting this edge looks more promising than before.
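This update can be written with the standard Beta-Bernoulli rule. We assume here that a success means an interesting patch is found through the edge and that any other outcome counts as a failure; the slide only describes the success case. The rule and the resulting expected success probability are:

$$
\mathrm{Beta}(\alpha, \beta) \xrightarrow{\text{success}} \mathrm{Beta}(\alpha + 1, \beta),
\qquad
\mathrm{Beta}(\alpha, \beta) \xrightarrow{\text{failure}} \mathrm{Beta}(\alpha, \beta + 1),
\qquad
\mathbb{E}[\theta] = \frac{\alpha}{\alpha + \beta}
$$

For instance, the means of $\mathrm{Beta}(2,2)$, $\mathrm{Beta}(3,2)$, and $\mathrm{Beta}(5,2)$ are $2/4 = 0.5$, $3/5 = 0.6$, and $5/7 \approx 0.71$. Each additional success moves the mass toward 1.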

The natural question that then arises is: can we devise a grey-box approach that performs better than the black-box approach?

---

# Blackbox Guidance Policy

- While traversing the patch-space tree, this policy gives higher priority to edges that are more likely to lead to the discovery of interesting patches.

- Note that the only runtime information used in this policy is whether a test passes or fails after applying a patch.

In the grey-box approach, each edge is associated with critical branches, which are obtained by executing the interesting patches observed in the corresponding subtree. The black-box approach assigns a Beta distribution to each edge; the grey-box approach instead assigns a Beta distribution to each critical branch.